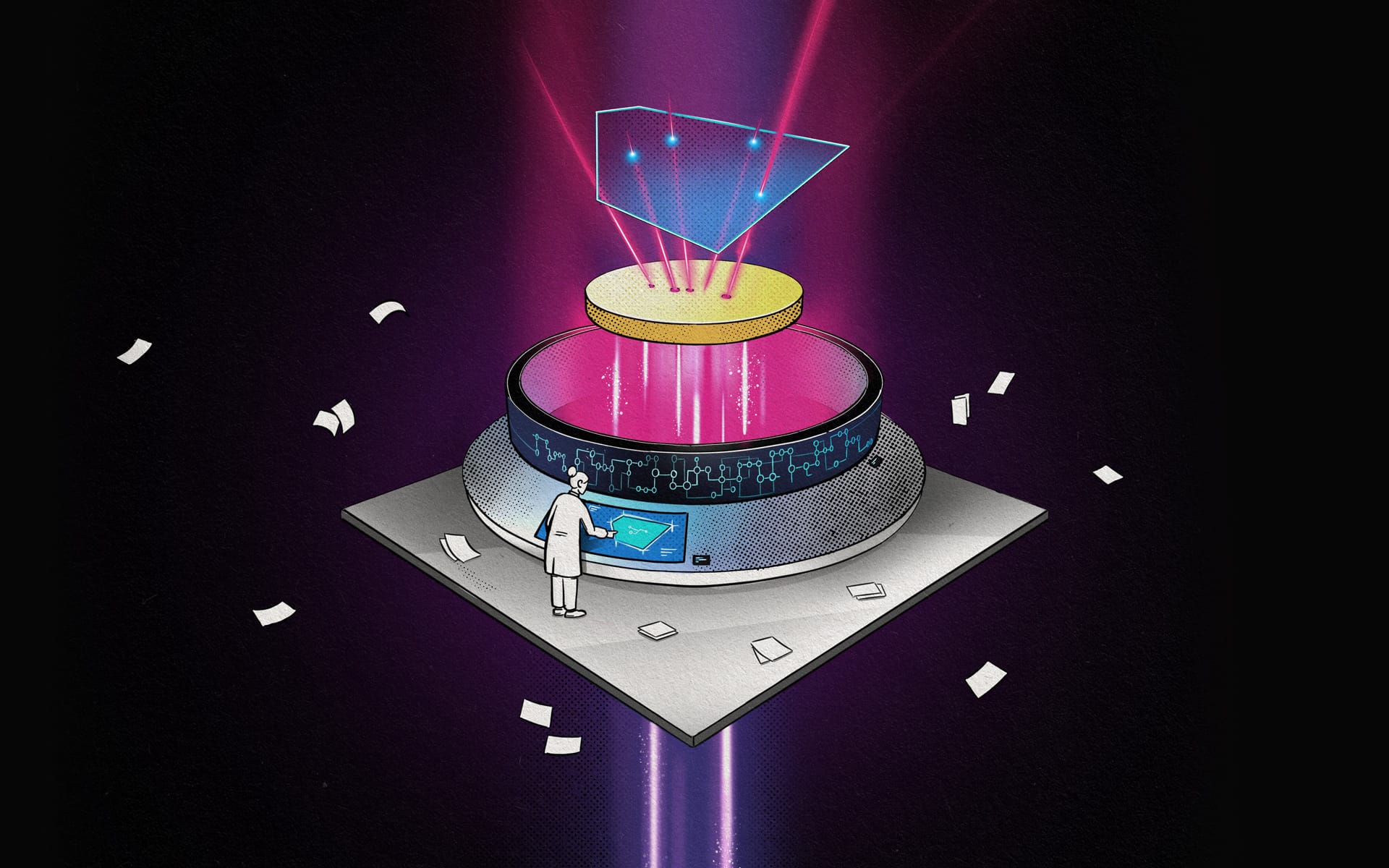

Particle accelerator facilities like CERN have long been at the forefront of innovation, driving advances in machine learning and data processing. Managing and analyzing the colossal amounts of data these facilities produce has led to the development of sophisticated algorithms and technologies. Today, synchrotrons – specialized particle accelerators that produce high-intensity X-ray radiation – are entering a similar “CERN moment”, with data production reaching unprecedented levels.

Synchrotrons such as the ESRF in Grenoble and PETRA III in Hamburg play a crucial role in supporting an extensive range of scientific disciplines, including materials science, biology, environmental science, and even archaeology. Their high-intensity X-rays are essential for studying molecular structures, developing nanomaterials, understanding protein structures and complex biological systems, and examining historical artifacts.

Aerial shot of the European Synchrotron Radiation Facility (ESRF) in Grenoble, France. © ESRF / P.JAYET

Recent innovations in synchrotron facilities are dramatically improving the characteristics of the produced X-ray radiation, opening vast opportunities across scientific applications. However, the resulting growth in the volume and intricacy of data poses unique challenges for machine learning algorithms, and these challenges are in turn driving innovation in ML. Such innovation is essential to fully harness the potential of synchrotron research and its wide-ranging benefits.

Dynamic nature of applied experimental science

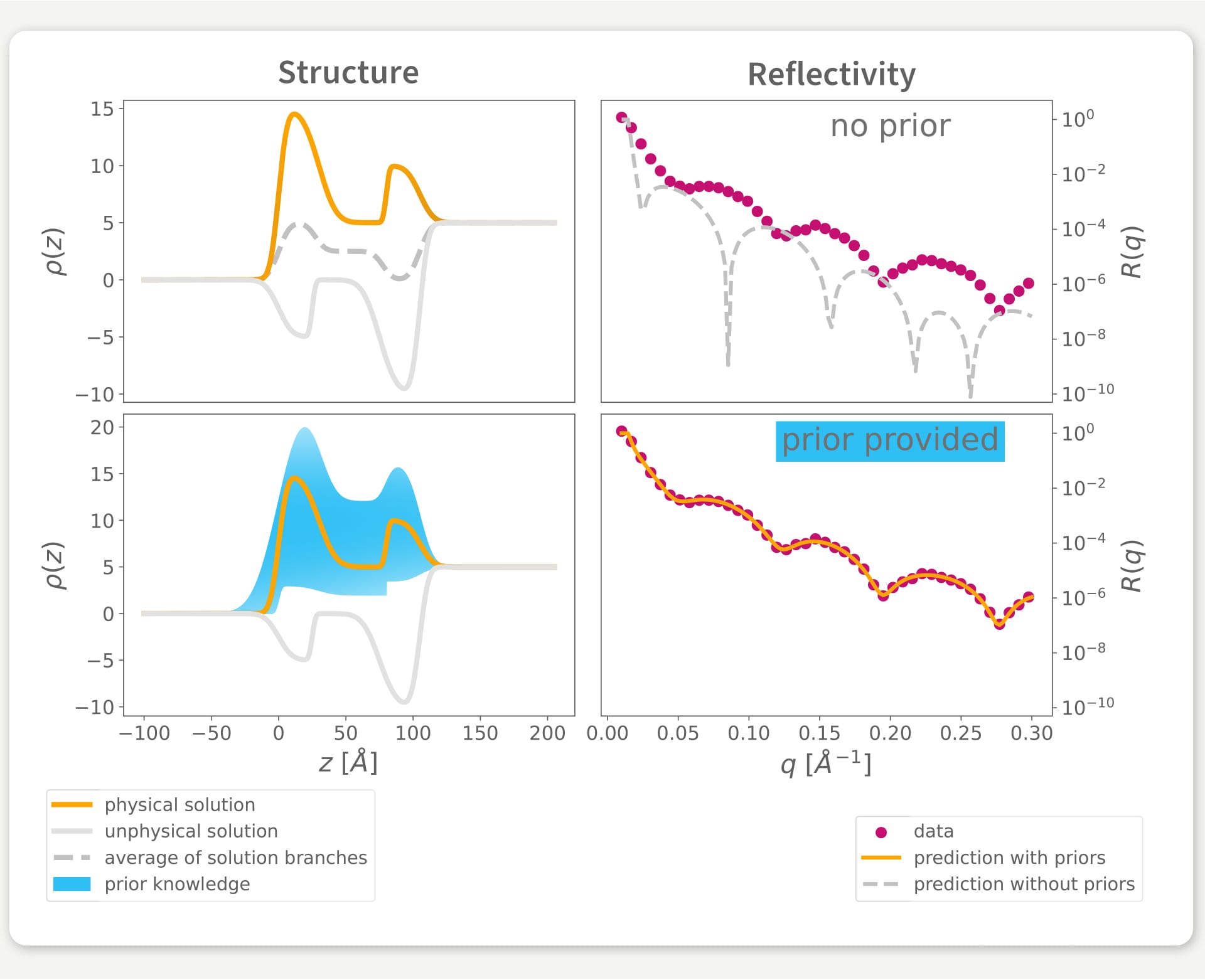

One significant challenge for research at synchrotrons is the diverse nature of the experimental environments employed by visiting scientists. A single scattering technique, like X-ray (or neutron) reflectometry, can study a wide range of systems, from semiconductors and organic solar cells to biological membranes. These systems are often thin structures composed of different layers, with thicknesses ranging from several atoms to micrometers. As an experimental technique, reflectometry can provide encoded information about these layered structures in the form of specific interference patterns. Yet, decoding these patterns to understand the actual structure of the system is a complex problem. Successful analysis requires prior knowledge about the structure, such as layer materials, the manufacturing process, or previous studies. In addition, a conventional analysis of reflectometry data can take hours of work to decode a single structure.
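To illustrate how a layered structure ends up encoded in an interference pattern, the following minimal sketch computes the specular reflectivity of a stack of ideally smooth layers using the standard Parratt recursion. It is a textbook forward model, not the analysis code discussed in this article. The function name `reflectivity`, its arguments, and the example values are assumptions chosen for illustration, and interface roughness and absorption are ignored.

```python
import cmath
import math


def reflectivity(q, slds, thicknesses):
    """Specular reflectivity of a smooth layer stack at momentum transfer q (1/Å).

    slds        -- scattering length densities (1/Å^2), ordered
                   ambient, layer 1, ..., layer n, substrate
    thicknesses -- thicknesses (Å) of layers 1..n only
    """
    if len(slds) != len(thicknesses) + 2:
        raise ValueError("need one SLD per layer plus ambient and substrate")

    k0 = q / 2.0
    # Perpendicular wavevector component in every medium, relative to the ambient.
    # cmath.sqrt returns a positive imaginary value below the critical edge.
    kz = [cmath.sqrt(k0 ** 2 - 4.0 * math.pi * (s - slds[0])) for s in slds]

    # Start at the deepest interface (last layer / substrate).
    r = (kz[-2] - kz[-1]) / (kz[-2] + kz[-1])

    # Walk upward through the stack, combining each interface with what lies below it.
    for j in range(len(slds) - 3, -1, -1):
        fresnel = (kz[j] - kz[j + 1]) / (kz[j] + kz[j + 1])
        phase = cmath.exp(2j * kz[j + 1] * thicknesses[j])
        r = (fresnel + r * phase) / (1.0 + fresnel * r * phase)

    return abs(r) ** 2


# Hypothetical example: a 200 Å film on a substrate, measured in vacuum.
q_values = [0.01 + 0.002 * i for i in range(100)]
curve = [reflectivity(q, [0.0, 1.0e-5, 2.0e-5], [200.0]) for q in q_values]
```

Evaluating the model over a range of q values, as in the last lines, gives a curve whose oscillation period depends on the film thickness. Going in the opposite direction, from a measured curve back to the structure, is the decoding problem described above.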

In our group, we are developing neural networks to decode structures from reflectometry data in milliseconds. Five years ago, we published the very first approach to neural network-based reflectometry analysis. Our solution was indeed superior in speed compared to experimentalist analyses, but it could not compete in accuracy. The additional, prior knowledge about the system was a key missing ingredient for the neural network, as it treated each sample in the exact same way.

In our recent work, we have overcome this limitation. Now, an experimentalist can inform the neural network about the approximate properties of the materials and the interfaces of a particular structure, greatly decreasing the level of uncertainty and improving analysis accuracy. This key addition turns the neural network from a fast but inaccurate replacement for a human into a useful tool that researchers can routinely employ. Importantly, the incorporation of dynamic prior knowledge makes it possible to reuse the same model without retraining for very different experimental scenarios and with different applications – a key practical requirement that is very common in applied experimental science.
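One way such prior knowledge could be handed to a network is sketched below: the experimentalist's bounds are appended to the measured curve as extra inputs, and the network's output is interpreted relative to those bounds. This is an illustrative assumption about how the conditioning might look, not a description of the published implementation. The names `Bounds`, `build_input`, and `decode_output` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bounds:
    low: float
    high: float


def scale(value, bounds):
    """Map a physical value to [-1, 1] relative to the given bounds."""
    if bounds.high <= bounds.low:
        raise ValueError("upper bound must exceed lower bound")
    return 2.0 * (value - bounds.low) / (bounds.high - bounds.low) - 1.0


def unscale(unit, bounds):
    """Map a value in [-1, 1] back to the physical range of the bounds."""
    return bounds.low + (unit + 1.0) / 2.0 * (bounds.high - bounds.low)


def build_input(log_curve, priors, global_ranges):
    """Concatenate the measured curve with the normalised prior bounds.

    priors        -- per-parameter bounds supplied by the experimentalist
    global_ranges -- the widest bounds the model is assumed to be trained on
    """
    features = list(log_curve)
    for prior, widest in zip(priors, global_ranges):
        features.append(scale(prior.low, widest))
        features.append(scale(prior.high, widest))
    return features


def decode_output(unit_outputs, priors):
    """Interpret network outputs in [-1, 1] as positions inside each prior."""
    return [
        unscale(max(-1.0, min(1.0, u)), prior)
        for u, prior in zip(unit_outputs, priors)
    ]
```

Because the bounds are ordinary inputs in this sketch, narrowing or shifting them changes what the model is asked to do without changing its weights, which is the property that would allow one trained model to serve very different samples.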

Fig. 1: Prior knowledge helps the neural network in reconstructing the electron density profile of a physical structure (left-hand side) from the measured data (right-hand side). © VALENTIN MUNTEANU / VLADIMIR STAROSTIN

Autonomous online experiments

Not only does our solution accelerate analysis, it also enables new types of experiments that would be unimaginable without machine learning.

A typical experimental scenario involves studying a dynamic process in real time by periodically taking “snapshots” of the system using reflectometry. In many applications, it would be desirable to adjust experimental conditions, such as temperature, based on the emerging data. But this requires the reflectometry data to be decoded in real time.

This is where our solution comes into play. Our neural network can be supplied with a set of rules that tell it how to adjust the experimental conditions depending on the observed behavior of the system. Furthermore, by looking at previous moments in time, the neural network can update its prior knowledge, completely eliminating the need for manual input. This results in a fully autonomous, closed-loop system that we have already successfully battle-tested at a synchrotron facility.
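The closed loop described above can be sketched schematically as follows. Everything here is a simplified assumption for illustration: the `analyse` callable stands in for the neural network, the rule in `next_temperature` is a made-up example (heat when the film grows too slowly, cool when it grows too quickly), and the prior is simply re-centred on the latest estimate. The actual rules and update scheme used at the beamline are not specified in this article.

```python
def update_prior(estimate, half_width, hard_limits):
    """Re-centre the prior on the latest estimate, clipped to hard limits."""
    low = max(hard_limits[0], estimate - half_width)
    high = min(hard_limits[1], estimate + half_width)
    return (low, high)


def next_temperature(temperature, growth, target_growth, step=1.0):
    """Hypothetical rule: steer the growth per snapshot toward a target."""
    if growth < target_growth:
        return temperature + step
    if growth > target_growth:
        return temperature - step
    return temperature


def run_loop(snapshots, analyse, prior, temperature, target_growth,
             half_width=5.0, hard_limits=(0.0, 1000.0)):
    """Analyse each snapshot, adjust the temperature, and update the prior."""
    history = []
    previous = None
    for curve in snapshots:
        # Stand-in for the network: returns a thickness estimate within the prior.
        thickness = analyse(curve, prior)
        if previous is not None:
            temperature = next_temperature(
                temperature, thickness - previous, target_growth
            )
        # The next snapshot is analysed with a prior built from this result,
        # so no manual input is needed between snapshots.
        prior = update_prior(thickness, half_width, hard_limits)
        history.append((thickness, prior, temperature))
        previous = thickness
    return history
```

In a real experiment, `snapshots` would be a stream of measured curves and the temperature change would be sent to the sample environment. The sketch only shows how analysis, decision, and prior update can be chained without a human in between.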

In this way, our solution opens up new perspectives for reflectometry and applications that rely on it. By employing a neural network and supplying it with relevant insights, researchers can now process reflectometry data almost instantly, shifting the focus from tedious data analysis to scientific questions and newly available types of experiments.

Original Publication: V. Munteanu, V. Starostin, A. Greco, L. Pithan, A. Gerlach, A. Hinderhofer, S. Kowarik, and F. Schreiber. Neural network analysis of neutron and X-ray reflectivity data incorporating prior knowledge. J. Appl. Cryst. 57 (2024) 456.

Cover illustration: Franz-Georg Stämmele
